Triclops is another one of my incomplete projects. It started while I was a freshman at Milwaukee School of Engineering. My goal was to build a Spinbot: a single-motor robot that modulates its speed to drift toward its target. Behaviorally, the robot is designed as a Photovore whose target is the brightest light in a room.

The GitHub repository is here. Note that it is not well maintained and probably never will be, given that this is an abandoned project.

Attempt #1

The following is my first attempt at making a Spinbot, along with some of the old notes I wrote on the original blog (home-built-robotics).

When re-kickstarting my blog (for attempt #2), I started documenting Triclops.

So first, it has been a while since my last post, partially because of my latest creation and partially because of work and now college. So first, a little about college: I am now a freshman at MSOE, going for a computer engineering degree. Classes start next week; it has been orientation all week. So hopefully I can somewhat retool this blog to also cover the projects I will be doing at college.

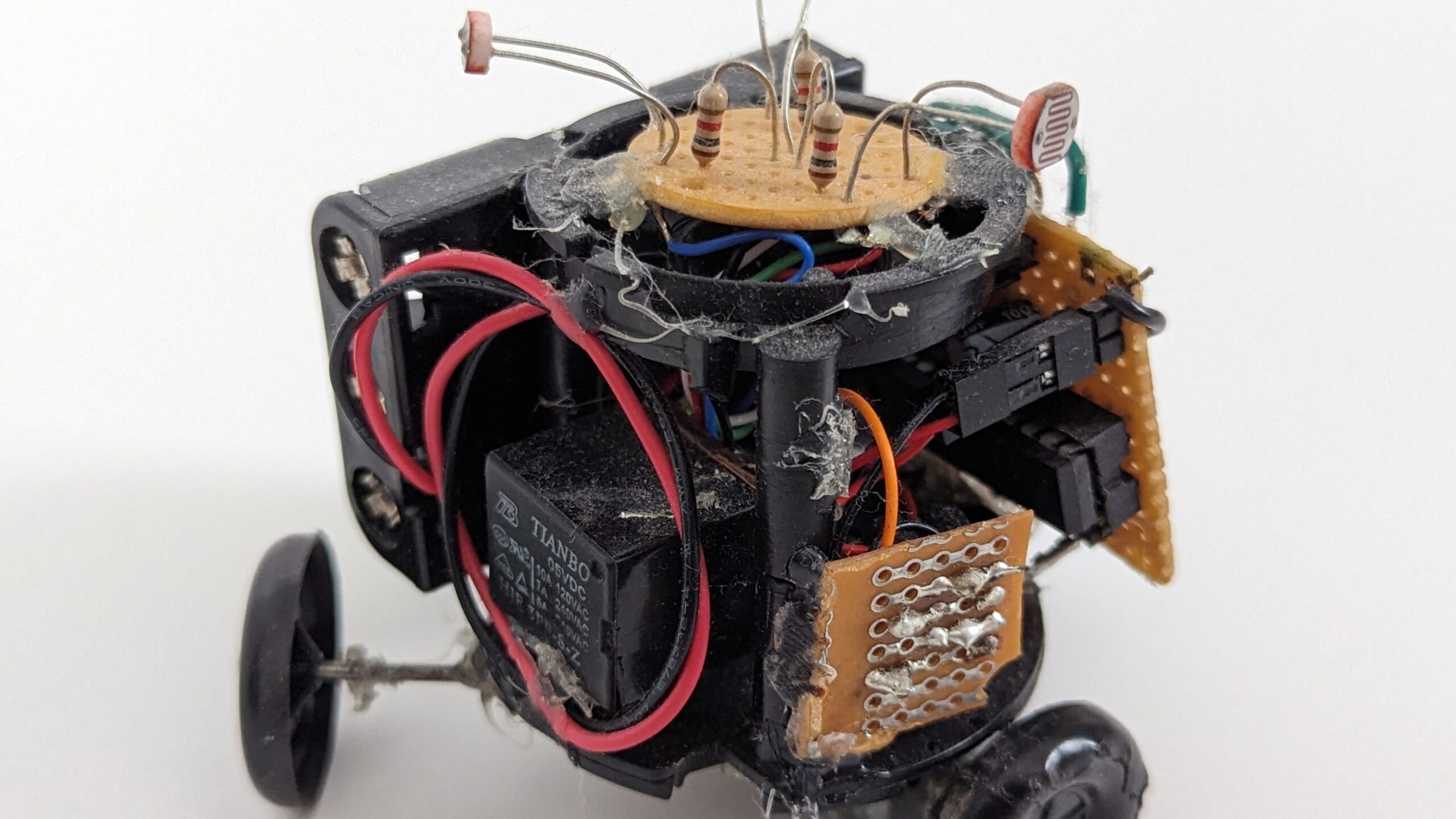

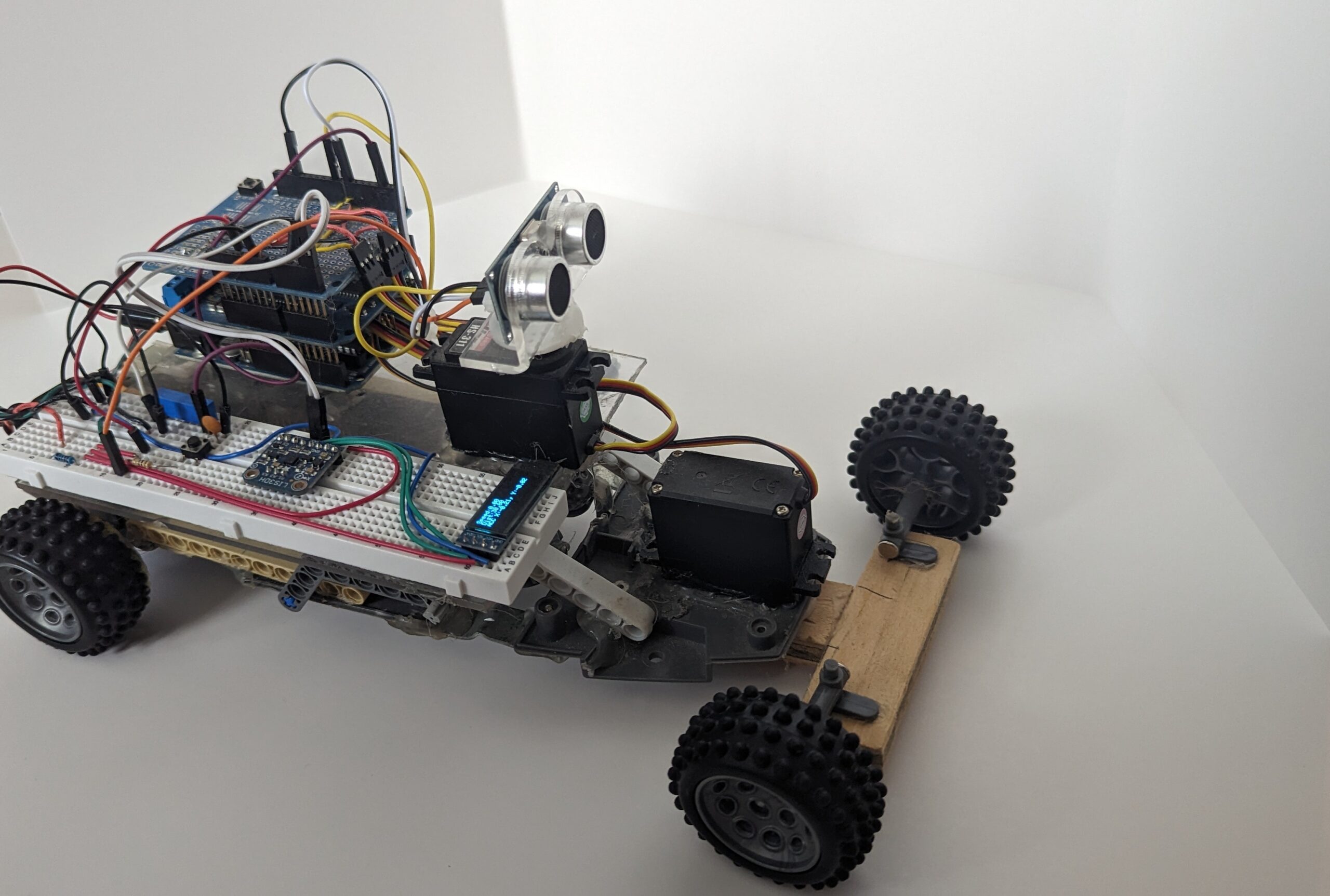

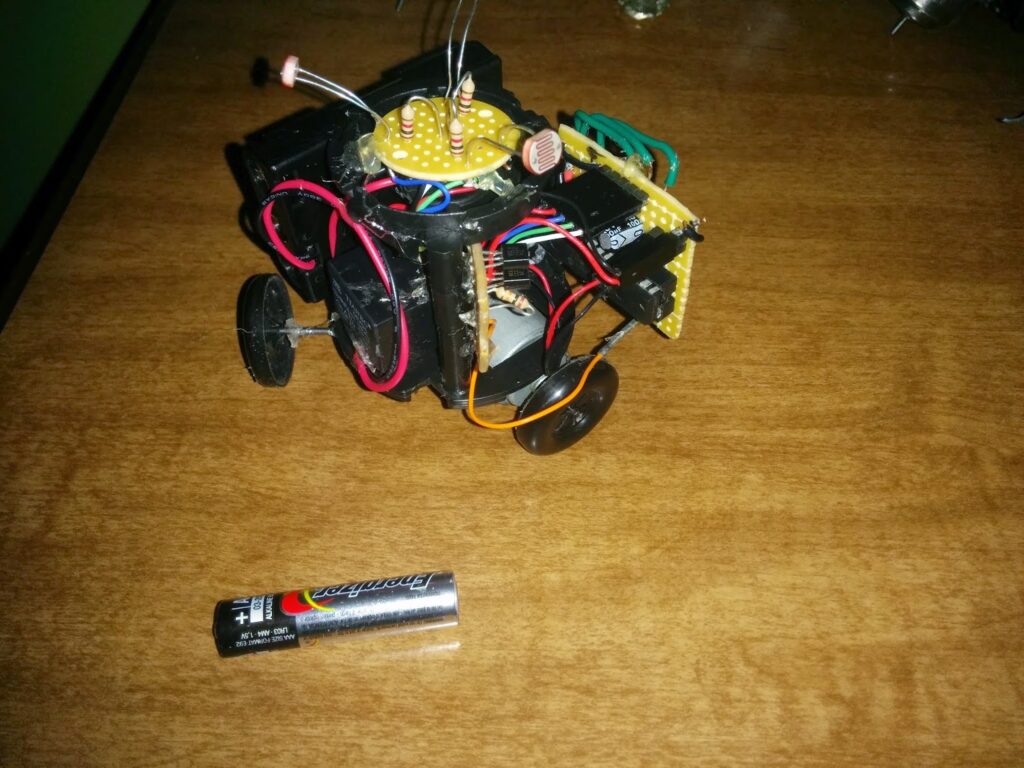

Triclops the Spinbot with AAA battery for scale. Triclops is a single motor photovore with three light sensors and a neural-network control policy.

Triclops has one motor for output and thus can only spin in a circle. By pulsing its motor fast enough (a.k.a. PWM), it adjusts the radius of the circle around some point in space (the center of rotation). If the drive wheel moves slowly, the distance between the robot’s center of rotation and its center of mass is larger; if the drive wheel moves significantly faster, the center of rotation shifts toward the center of mass. Dynamically adjusting the PWM lets the robot modulate its arc and thereby drift in a target direction.
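To make the pulsing idea concrete, here is a minimal Python sketch of software PWM. It assumes a fixed number of ticks per PWM period. It also assumes the motor and wheel inertia smooth out the pulses, so the drive wheel's average speed is roughly proportional to the fraction of ticks the motor is on. The function names and `max_speed` are hypothetical; they are not taken from the Triclops firmware.

```python
def pwm_pattern(duty_cycle: float, ticks_per_period: int = 20) -> list:
    """Return one PWM period as a list of 1 (motor on) / 0 (motor off) ticks."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    on_ticks = round(duty_cycle * ticks_per_period)
    return [1] * on_ticks + [0] * (ticks_per_period - on_ticks)


def average_speed(pattern: list, max_speed: float) -> float:
    """Approximate drive-wheel speed as max_speed times the on-fraction.

    Assumes inertia smooths the individual pulses into a steady speed.
    """
    return max_speed * sum(pattern) / len(pattern)


if __name__ == "__main__":
    for duty in (0.25, 0.5, 1.0):
        pattern = pwm_pattern(duty)
        print(duty, average_speed(pattern, max_speed=1.0))
```

Under the description above, a lower duty cycle (a slower wheel) means a wider arc. A higher duty cycle pulls the center of rotation toward the center of mass.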

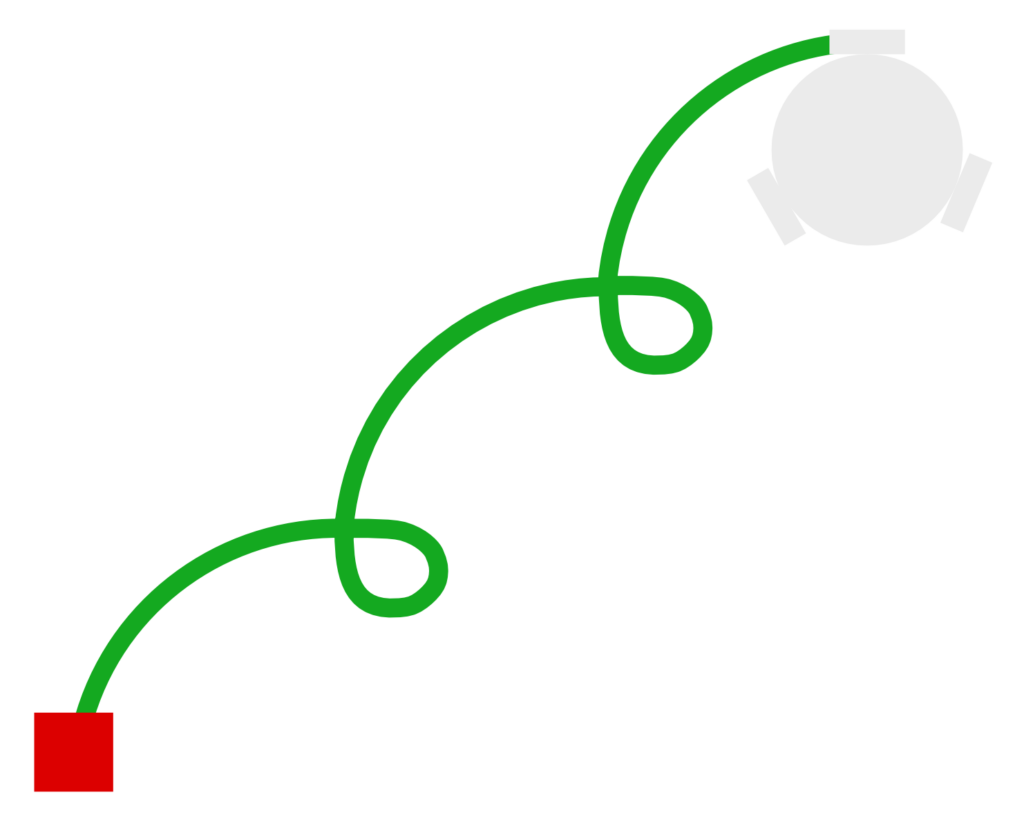

Below is a diagram demonstrating how I envision the Spinbot behaving.

Sketch of example trajectory for Spinbot to a target (red cube). It needs to spin slower for arcs that approach the target and spin faster to stay around the same point for the next approach arc.
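The sketch can also be turned into a toy simulation. The Python below is a hypothetical kinematic model, not the Unity simulation from attempt #2. In it, the robot always rotates at a constant rate and translates along its heading. It takes a wide, slow arc only while its heading is within a window of the target, and a tight, fast spin otherwise. The model cheats by knowing the true bearing to the target, which the real robot would have to infer from its light sensors. All speeds and the 45° window are made-up values.

```python
import math


def bearing_error(heading: float, x: float, y: float, tx: float, ty: float) -> float:
    """Signed angle in radians from the robot's heading to the target, in [-pi, pi)."""
    desired = math.atan2(ty - y, tx - x)
    return (desired - heading + math.pi) % (2 * math.pi) - math.pi


def simulate_drift(target=(5.0, 3.0), steps=2000, dt=0.01,
                   spin_rate=2 * math.pi,    # rad/s, assumed constant in both modes
                   wide_arc_speed=0.5,       # m/s of drift while spinning slowly
                   tight_spin_speed=0.05,    # m/s of drift while spinning fast
                   window=math.radians(45)) -> float:
    """Return the final distance to the target after `steps` time steps.

    Hypothetical model: take the wide (slow) arc only while the heading is
    within `window` of the target bearing, otherwise spin tightly in place.
    """
    x, y, heading = 0.0, 0.0, 0.0
    tx, ty = target
    for _ in range(steps):
        facing_target = abs(bearing_error(heading, x, y, tx, ty)) < window
        speed = wide_arc_speed if facing_target else tight_spin_speed
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
        heading = (heading + spin_rate * dt) % (2 * math.pi)
    return math.hypot(tx - x, ty - y)
```

With a zero-width window, the robot only ever spins tightly and ends up roughly where it started. With the default window, it drifts noticeably toward the target.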

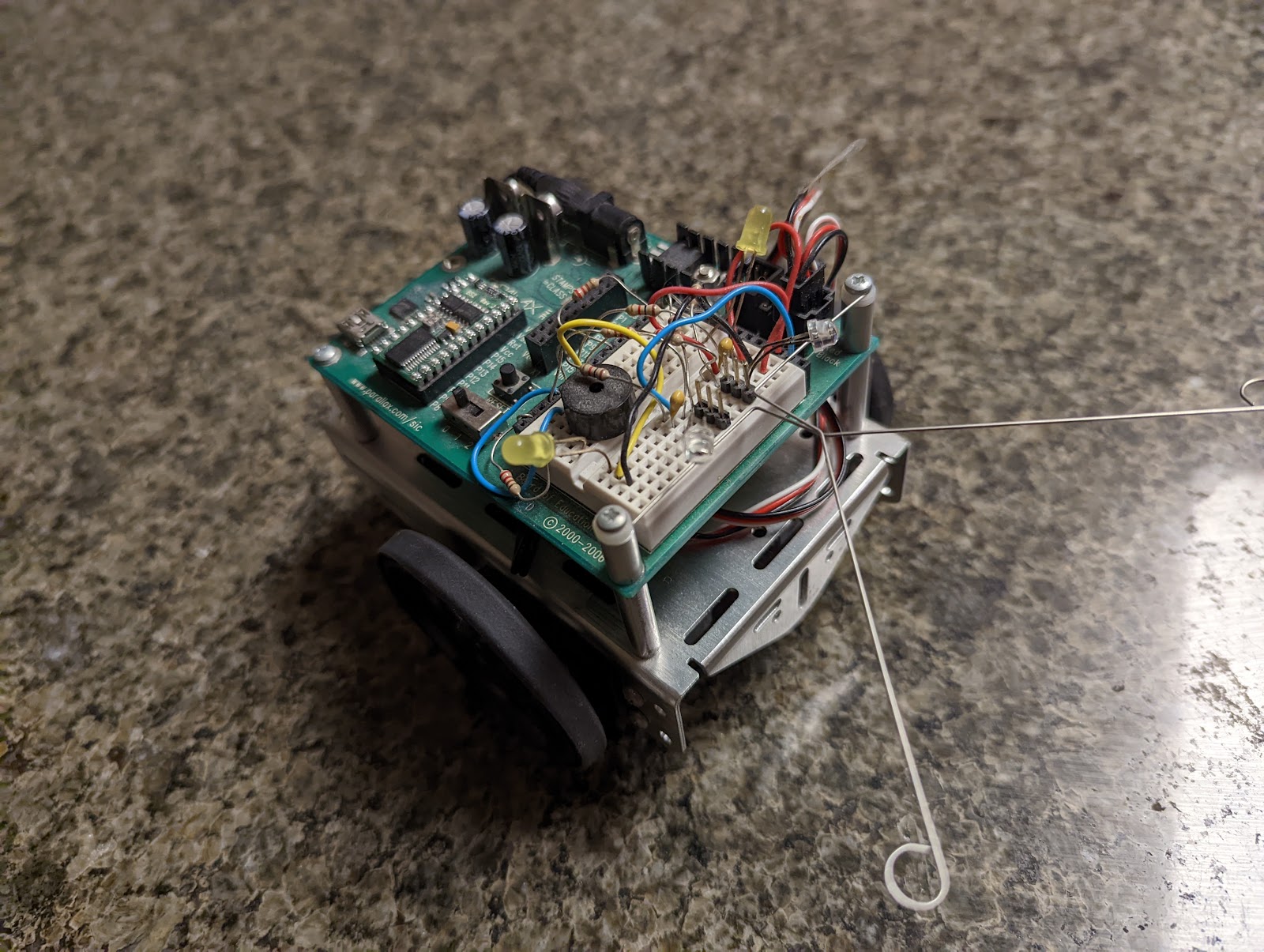

For sensory input, I used three CdS photoresistors that provide analog values to a PICAXE microcontroller. The PICAXE runs a variant of BASIC (different from the Parallax Boe-Bot BASIC) on top of a standard Microchip PIC microcontroller.

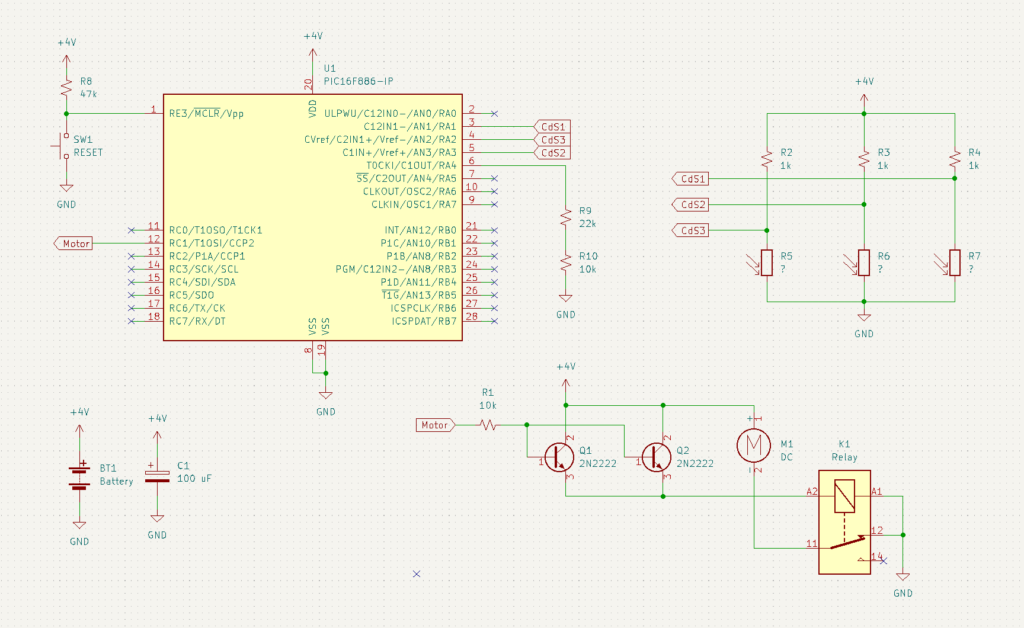

The following is the schematic capture for Triclops the Spinbot.

Schematic Capture for Triclops the Spinbot.

A couple of oddities in the circuit are worth noting. Resistors R9 and R10 are in series because together they came close to the target 35k ohms and I had them on hand. The parallel transistors Q1 and Q2 were added to provide enough current to drive the relay I salvaged from an old toy.

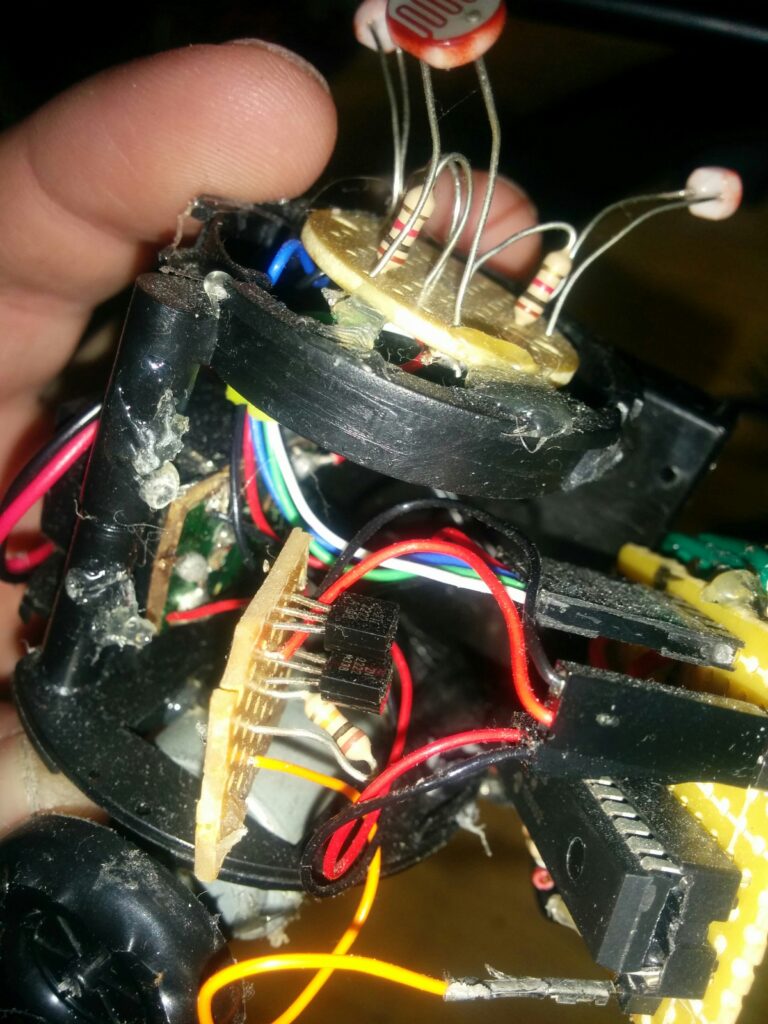

I soldered the circuit on some spare protoboard. It was a bit less of a rat’s nest than some of my older robots, but not by much.

Mechanically, it was a mess of hot glue and salvaged parts. The motor and wheels came from old toys. Note that I had to wrap electrical tape around the drive wheel as the “rubber” to increase friction. The chassis was salvaged from the same plant trinket I used for my BEAM robot SymFlower.

Various views of Triclops’ construction. The breadboard PICAXE carrier itself turned out nicely, though.

The code (the magic) of the robot is how the three sensors control that one motor so that it tracks the brightest light source. Not wanting to work out all the math myself, I used a single artificial neuron in code, trained to recognize when it should activate the motor. Effectively, it is a bang-bang closed-loop control policy.
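For comparison with the textbook form, here is the same idea as a floating-point Python sketch: a weighted sum of the three sensor readings plus a bias, passed through a step (bang-bang) activation. The weights mirror the integer weights in the PICAXE listing later in this post (649, 131, -1000 and a bias of 15000, applied to readings scaled to percent). The function names are hypothetical.

```python
WEIGHTS = (649, 131, -1000)  # per-sensor weights, as in the PICAXE listing
BIAS = 15000


def neuron_sum(readings_percent):
    """Weighted sum of the three CdS readings (each 0-100) plus the bias."""
    return sum(w * x for w, x in zip(WEIGHTS, readings_percent)) + BIAS


def motor_command(readings_percent) -> bool:
    """Step activation: run the motor only when the weighted sum is positive."""
    return neuron_sum(readings_percent) > 0


if __name__ == "__main__":
    for readings in [(0, 0, 0), (100, 0, 50), (0, 0, 100)]:
        print(readings, motor_command(readings))
```

The third sensor's negative weight makes it inhibitory. A bright reading there switches the motor off even when the other two sensors see light.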

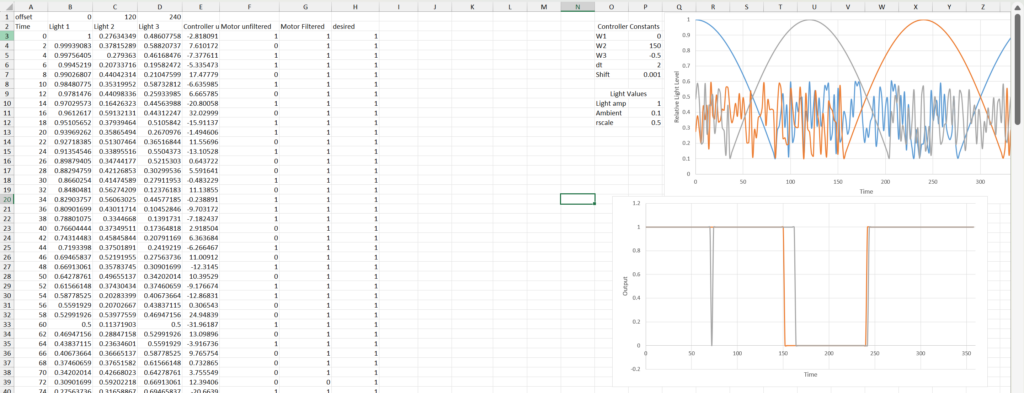

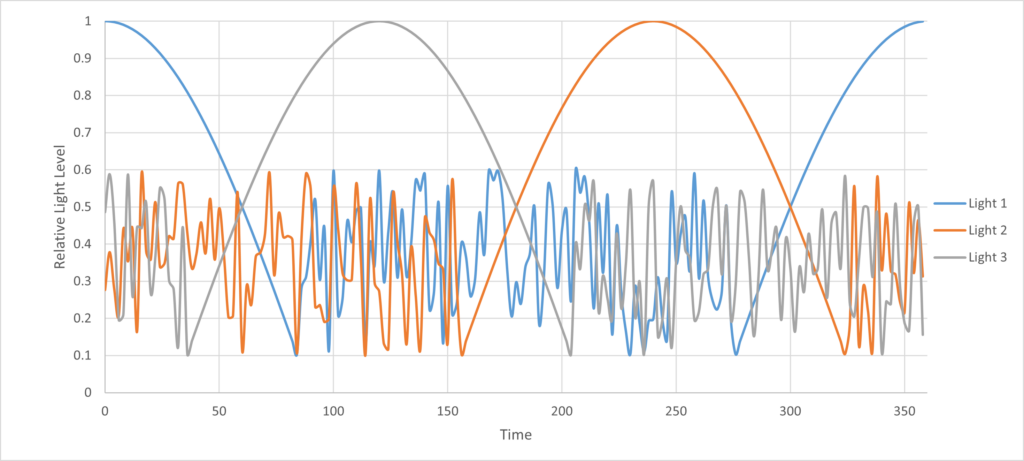

I manually “trained” this policy using Microsoft Excel. I assumed a single ideal light source, which each sensor effectively sees as a shifted sine wave. When the sine wave is negative, the sensor reads noise.

Screenshot of Excel with a manually trained neuron. Left side shows the input / output table alongside the desired value. Center shows the weights and control variables. Right shows graphs of inputs and outputs.

Close-up of the input graph.

Plot of the input sensors: ideal values for the portion of the sine wave where the sensor faces the light source, and random noise when it faces away.
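The spreadsheet's input columns can be generated with a short Python sketch. Each sensor sees a sine wave shifted by its mounting angle, and reads uniform noise whenever that sine is negative. The even 120° spacing of the sensors, the noise level, and the function names are assumptions for illustration.

```python
import math
import random
from typing import List, Optional, Tuple

# Assumed layout: three sensors spaced evenly (120 degrees apart) around the robot.
SENSOR_OFFSETS_DEG = (0.0, 120.0, 240.0)


def ideal_readings(light_angle_deg: float, noise_level: float = 0.1,
                   rng: Optional[random.Random] = None) -> List[float]:
    """One normalized reading (0-1) per sensor for a single ideal light source.

    While a sensor's shifted sine is negative, it faces away from the light
    and reads small random noise instead.
    """
    if rng is None:
        rng = random.Random()
    readings = []
    for offset in SENSOR_OFFSETS_DEG:
        value = math.sin(math.radians(light_angle_deg - offset))
        readings.append(value if value > 0.0 else rng.uniform(0.0, noise_level))
    return readings


def training_rows(step_deg: int = 10, seed: int = 0) -> List[Tuple[int, List[float]]]:
    """Sweep the light once around the robot, one row per angle."""
    rng = random.Random(seed)
    return [(angle, ideal_readings(angle, rng=rng)) for angle in range(0, 360, step_deg)]
```

Pairing each row with a desired motor state gives the kind of input / output table the spreadsheet used for tuning the weights by hand.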

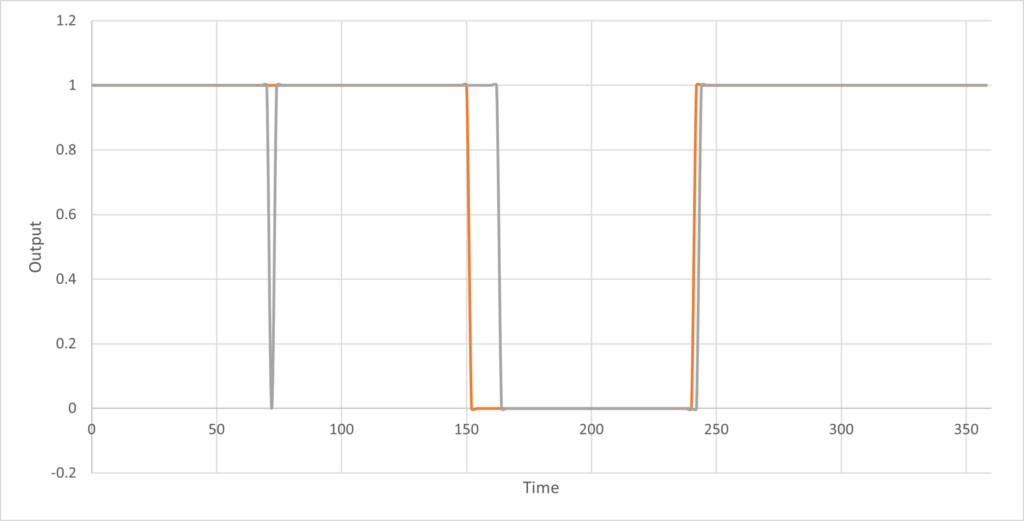

And the output graph with ideal and actual output.

Plot of motor control output given the control table in Excel. Orange is ideal control. Grey is output of the control neuron. Close enough for testing in hardware.

This would have been fairly simple to do on an Arduino, but the PICAXE microcontroller I selected does not natively support floating-point values (which is how neurons are canonically presented in textbooks). So I thought, what about modulus? It’s when you divide two values and keep the remainder as the output. I rationalized that decimal values between zero and one are nearly equivalent to remainders between 0 and 65535 (the range of a 16-bit PICAXE word variable).
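That idea is close to 16-bit fixed-point arithmetic. A fraction between zero and one is stored as an integer out of 65536, and multiplying by it means multiplying and then keeping only the high 16 bits of the product. PICAXE BASIC has operators aimed at this: `//` returns the remainder and `**` returns the high word of a multiply. The Python sketch below emulates this integer-only math under the assumption that PICAXE word variables are unsigned 16-bit integers (0 to 65535). That assumption also exposes a trap: a negative weighted sum wraps around to a large positive number. The helper names are hypothetical.

```python
WORD_MASK = 0xFFFF  # a PICAXE word variable holds 0..65535


def as_word(value: int) -> int:
    """Wrap an integer into 0..65535, mimicking unsigned 16-bit arithmetic."""
    return value & WORD_MASK


def fraction_to_word(fraction: float) -> int:
    """Store a fraction in [0, 1) as an integer out of 65536 (0.5 -> 32768)."""
    if not 0.0 <= fraction < 1.0:
        raise ValueError("fraction must be in [0, 1)")
    return min(round(fraction * 65536), WORD_MASK)


def scale_by_fraction(value: int, fraction_word: int) -> int:
    """value * fraction using integers only: keep the high 16 bits of the product."""
    return (as_word(value) * fraction_word) >> 16


if __name__ == "__main__":
    print(scale_by_fraction(1000, fraction_to_word(0.5)))  # half of 1000
    # A weighted sum of -26000 does not stay negative in a word variable:
    print(as_word(649 * 50 + 131 * 50 - 1000 * 80 + 15000))
```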

Taking inspiration, I wrote the following code.

```basic
'set variables for reading adc cds cells, and neural model memory
symbol sense1 = w1
symbol sense2 = w2
symbol sense3 = w3
symbol bias = w4
symbol neuronSum = w5
symbol cds1 = 1
symbol cds2 = 3
symbol cds3 = 2

'models a single neuron as described above that was trained to
'determine whether to turn the motor on or off based on
'the current light level
main:
    'get analog values from cds cells as byte (0-255)
    readadc cds1, sense1
    readadc cds2, sense2
    readadc cds3, sense3

    'convert from byte representation to percent (0-100)
    let sense1 = sense1 * 100 / 255
    let sense2 = sense2 * 100 / 255
    let sense3 = sense3 * 100 / 255

    'assign weights to inputs
    'PICAXE word math is unsigned 16-bit (0-65535) and runs left to right,
    'so a negative weight would wrap around. The trained weights
    '(649, 131, -1000, bias 15000) are halved to avoid overflow, and the
    'inhibitory sense3 term is compared against the sum instead of added
    let sense1 = sense1 * 649 / 2   'max 32450
    let sense2 = sense2 * 131 / 2   'max 6550
    let sense3 = sense3 * 500       'inhibitory, max 50000
    let bias = 7500

    'sum the excitatory terms (max 46500, fits in a word)
    let neuronSum = sense1 + sense2 + bias

    'if past threshold then trigger motor: the excitatory sum beating the
    'inhibitory term is the same as the full weighted sum being positive
    IF neuronSum > sense3 THEN
        high portc 1
    ELSE
        low portc 1
    ENDIF

    pause 5
    goto main
```

In the first attempt, the robot was unstable. To stop it from continually ramping up its speed, I subtracted the previous weighted speed from the summed weighted sensors, and then the neuron decided whether to fire the motor.
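The listing above does not include that fix, so here is a hedged Python sketch of the idea as described. The previous motor output, multiplied by a feedback weight, is subtracted from the weighted sensor sum before the step activation. Treating “speed” as the previous on/off output and the feedback weight of 20000 are both assumptions; the sensor weights mirror the listing.

```python
from typing import List, Sequence

WEIGHTS = (649, 131, -1000)   # sensor weights from the attempt #1 listing
BIAS = 15000
FEEDBACK_WEIGHT = 20000       # hypothetical weight on the previous motor state


def next_motor_state(readings_percent: Sequence[float], motor_was_on: bool) -> bool:
    """Weighted sensor sum minus weighted previous output, then a step activation."""
    total = sum(w * x for w, x in zip(WEIGHTS, readings_percent)) + BIAS
    total -= FEEDBACK_WEIGHT * int(motor_was_on)
    return total > 0


def run(readings_percent: Sequence[float], steps: int = 6) -> List[bool]:
    """Hold the sensor readings fixed and record the motor state each loop."""
    motor_on = False
    history = []
    for _ in range(steps):
        motor_on = next_motor_state(readings_percent, motor_on)
        history.append(motor_on)
    return history
```

With this feedback, a strong stimulus keeps the motor on. A moderate one makes it alternate on and off, a crude built-in duty cycle, instead of latching on.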

So now I am in the tuning stage. I plan on taking a video of it once I go back home (there never was a video and not much tuning).

The result of attempt #1 was a robot that could spin in a circle and sort of drift, but it was definitely not smart enough to be a proper Photovore. The robot needs more neurons and perhaps finer motor control.

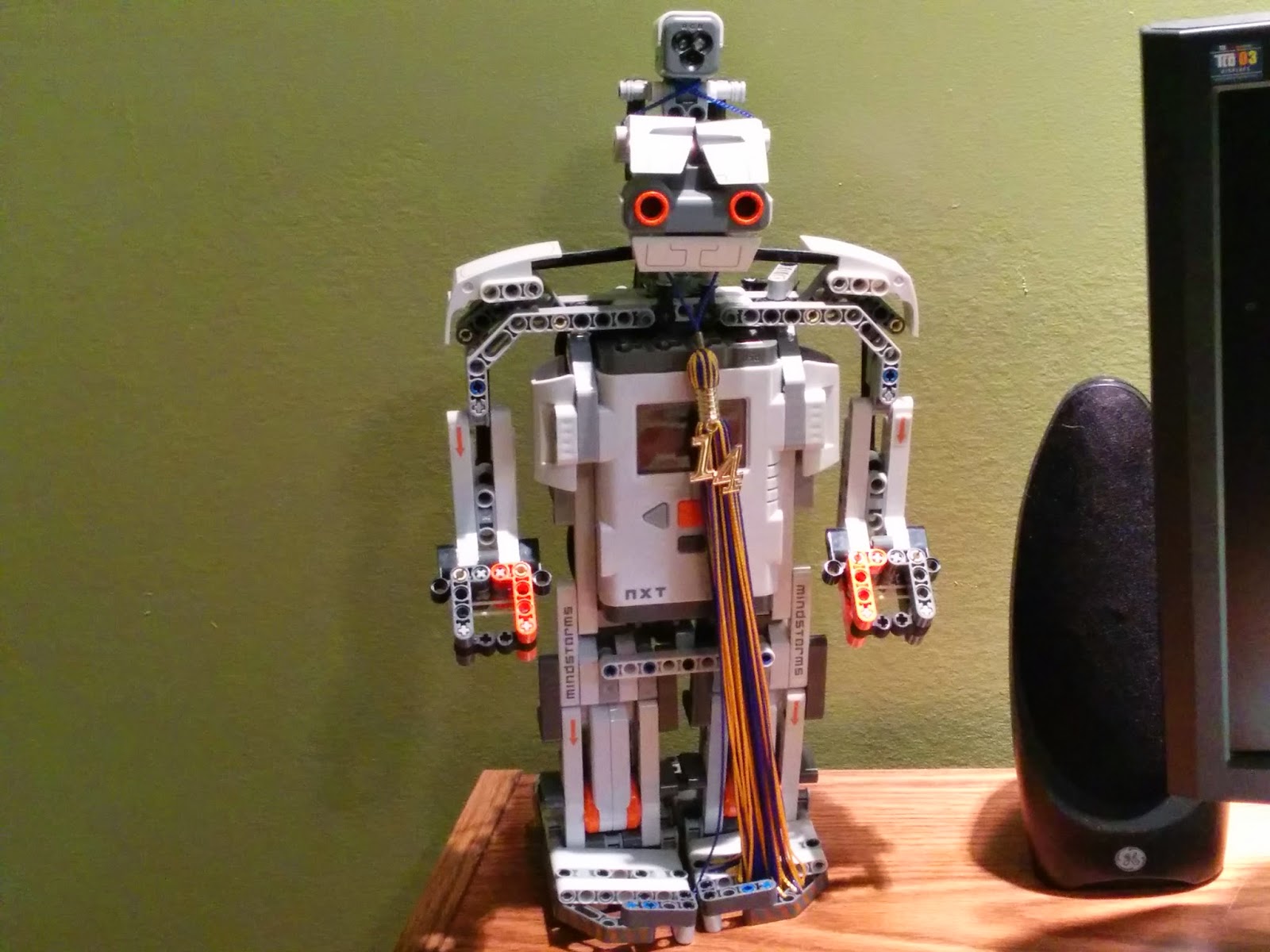

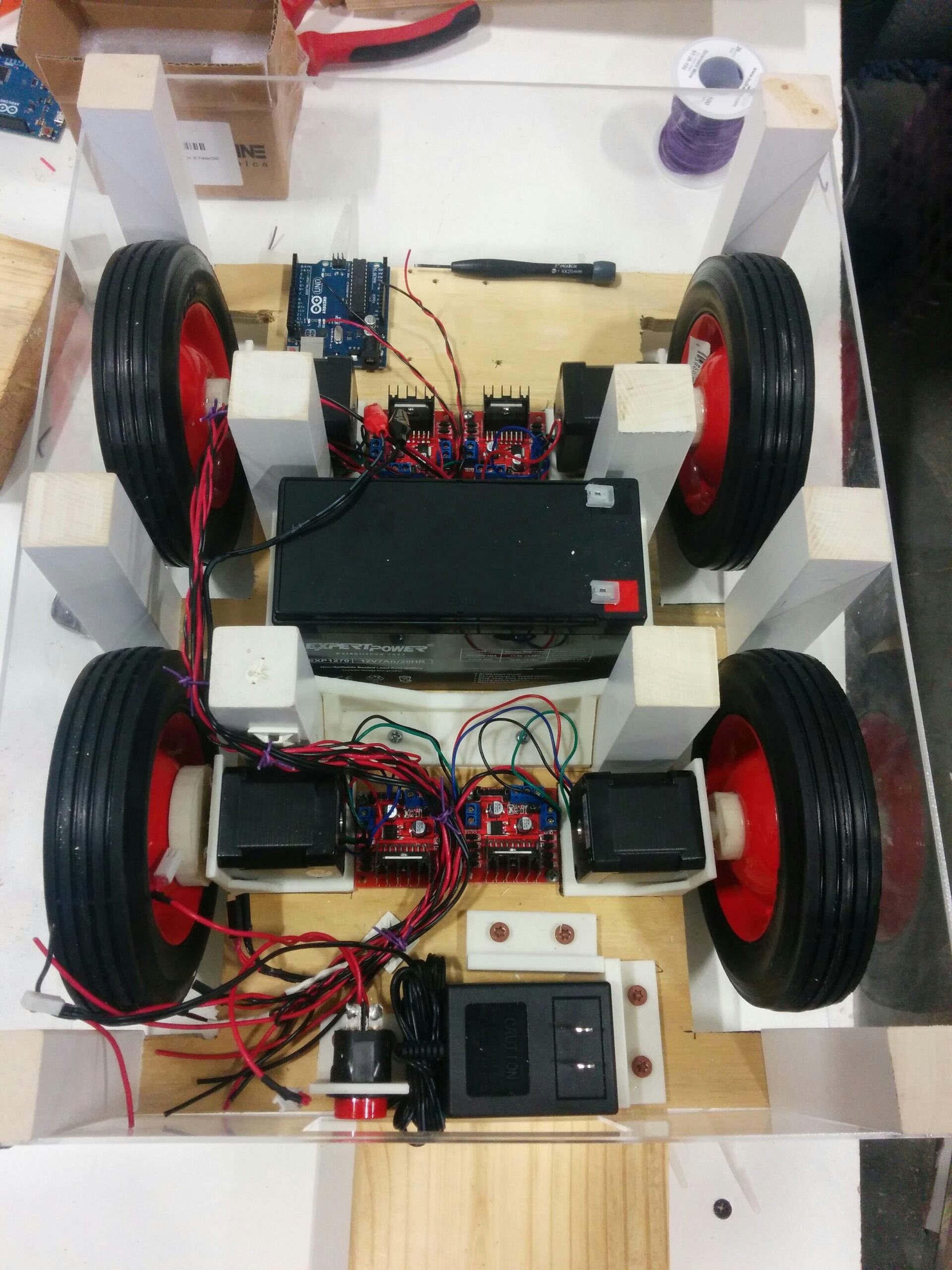

Triclops alongside some of my other robots: NoBB (behind) and Beetle (right).

Attempt #2

The summer before grad school at University of Wisconsin – Madison, I revisited this project with a focus on improving the neural network controller.

Simulation

I decided to build a simulation in Unity (a game engine) to model the rotation physics. The simulation consists of the robot model, a ground plane, and a target cube.

The robot was modeled with three wheels providing friction between it and the ground plane, one of which was the drive wheel. The drive wheel applied a force against the ground plane, thereby propelling the robot. Gravity was left at its default. A simple script could then modulate the force and thereby induce variable arc radii.

Next was designing the target and sensing system. The target (represented as a red cube) is a non-interactable object that is randomly placed in one of the quadrants relative to the robot (the robot always starts at the origin). The robot has “sensors” above each wheel that detect how bright the cube is. Brightness is a function of the angle from center and the distance from the sensor. Noise can also optionally be applied to make the readings more realistic.
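The Unity scripts are not reproduced here. As an illustration only, this Python sketch computes one simulated sensor's brightness in 2D. It assumes cosine falloff with the angle between the sensor's facing direction and the target, inverse-square falloff with distance, zero reading when the target is behind the sensor, and optional Gaussian noise. These falloff choices are assumptions, not the original simulation's formula.

```python
import math
import random
from typing import Optional, Tuple

Point = Tuple[float, float]


def sensor_brightness(sensor_pos: Point, facing_rad: float, target_pos: Point,
                      noise_std: float = 0.0,
                      rng: Optional[random.Random] = None) -> float:
    """Brightness seen by one simulated sensor (hypothetical falloff model)."""
    dx = target_pos[0] - sensor_pos[0]
    dy = target_pos[1] - sensor_pos[1]
    distance_sq = max(dx * dx + dy * dy, 1e-9)              # avoid dividing by zero
    alignment = math.cos(math.atan2(dy, dx) - facing_rad)   # 1.0 when facing the target
    brightness = max(0.0, alignment) / distance_sq
    if noise_std > 0.0:
        rng = rng if rng is not None else random.Random()
        brightness = max(0.0, brightness + rng.gauss(0.0, noise_std))
    return brightness
```

For a robot with a sensor above each wheel, this would be called once per sensor with that sensor's position and facing angle.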

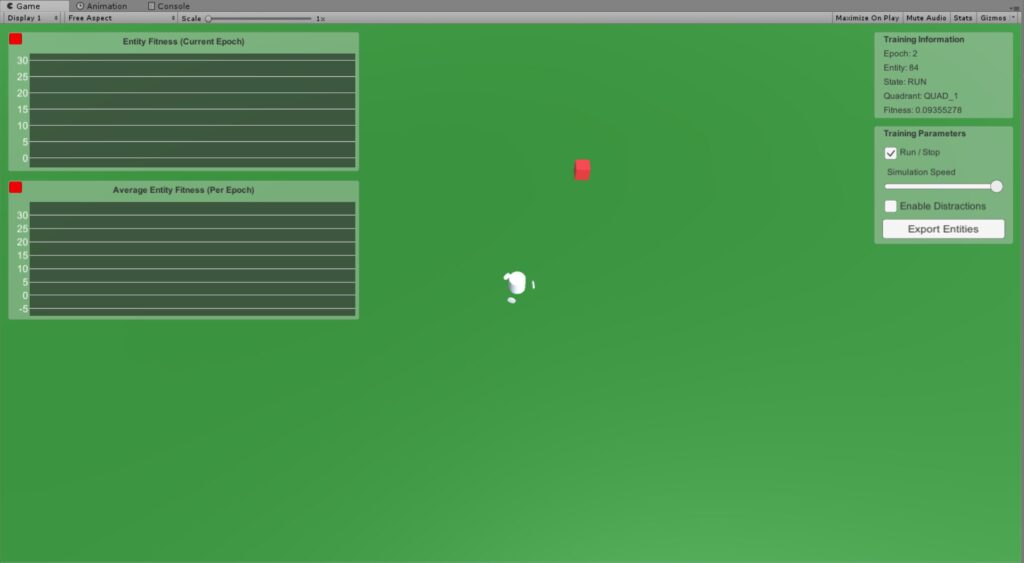

Lastly, as shown in the image, I built a simple UI to help diagnose the training, though it seems to have broken sometime between 2018 and 2025.

Unity screenshot of Spinbot controller training where robot is white cylinder (with wheels) and target is red block. Left are graphs for real-time entity / population fitness (though this seems to have broken). Right is the UI for the simulation.

Controller & Training Algorithm

With the simulation setup out of the way, let’s discuss the controller.

The controller is a standard shallow neural network with an input layer, hidden layer, and output layer.

- Input Layer = 9

  - The input layer is a bit larger than one would expect, given there are only three sensor inputs. Instead of just piping in the current sensor readings, each sensor provides its current value and its two previous values. This gives the network a bit of memory about its environment.

- Hidden Layer = 5

  - The hidden layer settled at five neurons after some manual tweaking. I wanted to keep this number low since it would be implemented on the PICAXE.

- Output Layer = 1

  - One output neuron makes sense: the motor is either on or off.
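A minimal Python sketch of this 9-5-1 layout follows, including the short history buffer that turns three sensors into nine inputs. The post does not state the activation functions, so tanh in the hidden layer and a step at the output are assumptions. The class and method names are hypothetical as well.

```python
import math
import random
from collections import deque
from typing import Sequence

N_SENSORS = 3
HISTORY = 3                      # current reading plus two previous readings
N_INPUT = N_SENSORS * HISTORY    # 9 inputs
N_HIDDEN = 5


class SpinbotNet:
    """Hypothetical 9-5-1 feed-forward controller with a step output."""

    def __init__(self, rng: random.Random) -> None:
        self.w_hidden = [[rng.uniform(-1.0, 1.0) for _ in range(N_INPUT)]
                         for _ in range(N_HIDDEN)]
        self.b_hidden = [rng.uniform(-1.0, 1.0) for _ in range(N_HIDDEN)]
        self.w_out = [rng.uniform(-1.0, 1.0) for _ in range(N_HIDDEN)]
        self.b_out = rng.uniform(-1.0, 1.0)
        # Newest frame on the left; the oldest falls off once HISTORY frames are stored.
        self.history = deque(([0.0] * N_SENSORS for _ in range(HISTORY)),
                             maxlen=HISTORY)

    def step(self, readings: Sequence[float]) -> bool:
        """Push the newest sensor frame and return True to switch the motor on."""
        self.history.appendleft(list(readings))
        inputs = [value for frame in self.history for value in frame]
        hidden = [math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
                  for row, b in zip(self.w_hidden, self.b_hidden)]
        output = sum(w * h for w, h in zip(self.w_out, hidden)) + self.b_out
        return output > 0.0
```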

I decided to use an evolutionary algorithm to train the neural network. At its simplest, it’s a population of neural networks that are successively selected by their fitness.

A couple things to keep in mind:

- Each neural network can be represented as a string of weights; this is its genetic code

- Neural networks can breed in pairs; the genetic code is a combination of the two parents

- The genetic code also has a chance for random mutations during breeding
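Those three points can be sketched in Python. A network's genome is its weights flattened into one list; for the 9-5-1 network above, that is 56 numbers if every neuron also has a bias, which is an assumption. Single-point crossover and per-gene Gaussian mutation are one common choice of operators, but the post does not say which operators the original Unity code used. Treat them, the mutation rate, and the scale as assumptions.

```python
import random
from typing import List

Genome = List[float]


def crossover(parent_a: Genome, parent_b: Genome, rng: random.Random) -> Genome:
    """Single-point crossover: a prefix from one parent, the rest from the other."""
    if len(parent_a) != len(parent_b):
        raise ValueError("parents must have the same genome length")
    cut = rng.randrange(1, len(parent_a))
    return parent_a[:cut] + parent_b[cut:]


def mutate(genome: Genome, rng: random.Random,
           rate: float = 0.05, scale: float = 0.5) -> Genome:
    """With probability `rate`, nudge each weight by Gaussian noise."""
    return [w + rng.gauss(0.0, scale) if rng.random() < rate else w for w in genome]


def breed(parent_a: Genome, parent_b: Genome, rng: random.Random,
          rate: float = 0.05) -> Genome:
    """Combine two parents, then apply random mutations."""
    return mutate(crossover(parent_a, parent_b, rng), rng, rate)
```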

Testing neural networks for fitness happens within an epoch (a generation of neural networks). Each network has the same amount of time to run in simulation where it attempts to hit a target in each quadrant. Fitness is (roughly) the closeness to each target in the least amount of time.
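Fitness is only described as “(roughly)” closeness and time, so here is one hypothetical way to score it in Python, not the formula from the original code. A target that is hit scores between 1 and 2, more for a faster hit. A missed target scores below 1, more for getting closer. The total sums one score per quadrant trial.

```python
from typing import Optional, Sequence, Tuple


def target_score(closest_distance: float, hit_time: Optional[float],
                 time_limit: float) -> float:
    """Score one target: faster hits score higher; misses score by closeness."""
    if hit_time is not None:
        return 1.0 + (time_limit - hit_time) / time_limit   # between 1.0 and 2.0
    return 1.0 / (1.0 + closest_distance)                    # between 0.0 and 1.0


def fitness(trials: Sequence[Tuple[float, Optional[float]]], time_limit: float) -> float:
    """Sum the per-target scores; each trial is (closest distance, hit time or None)."""
    return sum(target_score(distance, hit_time, time_limit)
               for distance, hit_time in trials)
```

Keeping every hit above every miss means a network is never rewarded for hovering near a target over actually reaching it.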

After an epoch (set to 100 neural networks), a subset (the 10 best-performing nets) passes directly into the next epoch. The rest of the next epoch is filled with offspring bred from pairs of these fittest parents. Note that the first epoch is special, since all of its networks are randomly generated. The hope is that eventually there will be an epoch with a neural network suitable for Spinbot’s controller.
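The epoch-to-epoch step can be sketched in Python as well. The population size of 100 and the 10 elites come from the description above. Drawing each breeding pair uniformly from the elites is an assumption. Any function that combines two parent genomes (with mutation) can be passed in as `breed`.

```python
import random
from typing import Callable, List

Genome = List[float]

POPULATION_SIZE = 100  # networks per epoch
ELITE_COUNT = 10       # best networks copied unchanged into the next epoch


def random_population(genome_length: int, rng: random.Random) -> List[Genome]:
    """The first epoch is special: every network starts from random weights."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(genome_length)]
            for _ in range(POPULATION_SIZE)]


def next_generation(population: List[Genome], scores: List[float],
                    breed: Callable[[Genome, Genome, random.Random], Genome],
                    rng: random.Random) -> List[Genome]:
    """Keep the fittest networks and fill the rest with their offspring."""
    ranked = sorted(zip(scores, population), key=lambda pair: pair[0], reverse=True)
    elites = [genome for _, genome in ranked[:ELITE_COUNT]]
    children = []
    while len(elites) + len(children) < POPULATION_SIZE:
        parent_a, parent_b = rng.sample(elites, 2)
        children.append(breed(parent_a, parent_b, rng))
    return elites + children
```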

I took heavy inspiration from this YouTube tutorial on neural networks with evolutionary algorithms: video link. Highly recommend watching for a better explanation.

Results

After setting up the simulation and writing the neural network training code, I was able to collect some interesting models. These were exported into a JSON file for further analysis.

This is where attempt #2 stalled: classes started for the fall term and the project dropped off.

Qualitatively, the simulation proved the Spinbot concept and validated the general controller training plan. I observed several networks that were able to modulate their arcs to hit targets in multiple quadrants. That said, I suspect these models would be difficult to port directly to the PICAXE, due to differences between the simulation and real-world sensor input / motor control.
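One concrete piece of that porting problem is precision: trained weights are floats, while the PICAXE only has integers. This hedged Python sketch checks how often a single neuron's decision changes when its weights are rounded to scaled integers. The weights 0.649, 0.131, -1.0 and bias 15 are illustrative values, chosen so that scaling by 1000 gives the integers from the attempt #1 listing. The sketch ignores the PICAXE's unsigned 16-bit limits.

```python
from itertools import product
from typing import List, Sequence


def quantize(weights: Sequence[float], scale: int) -> List[int]:
    """Round float weights to integers after multiplying by `scale`."""
    return [round(w * scale) for w in weights]


def fires(weights: Sequence[float], bias: float, inputs: Sequence[int]) -> bool:
    """Step activation on the weighted sum plus bias."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias > 0


def agreement(weights: Sequence[float], bias: float, scale: int, samples) -> float:
    """Fraction of input samples where the float and integer neurons agree."""
    int_weights = quantize(weights, scale)
    int_bias = round(bias * scale)
    matches = sum(fires(weights, bias, s) == fires(int_weights, int_bias, s)
                  for s in samples)
    return matches / len(samples)


# Readings in percent, stepped by 10 for each of the three sensors.
SAMPLES = list(product(range(0, 101, 10), repeat=3))
```

A multi-layer network from the simulation would need the same check layer by layer, and its hidden activations would also need an integer approximation.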

Photoshoot

I did a photoshoot with some of my robots. Here is the gallery for Triclops the Spinbot.

Closing Thoughts

Projects can be started with the best of intentions, but they don’t always lead to a complete (or near-complete) robot. Sometimes the lessons learned are the takeaway. In this case, the neural network and evolutionary training algorithms I learned were useful for future projects.

I would like to come back to the Spinbot idea. Here is a short list of what I would do for attempt #3.

- Build a new hardware platform that is easier to configure and control.

  - The microcontroller should be modern and have wireless onboard.

    - Perhaps an ESP32 if we want a small project.

    - Or a Raspberry Pi if we want to do something fancy.

    - Regardless, being able to send firmware / model updates wirelessly would be rather important for tuning the controller.

  - Motor control should be a standard PWM-driven module instead of a relay.


- Choose a coordinate frame.

  - Light sensors are interesting due to their low resolution. When the robot is spinning, they also define a local polar coordinate frame of reference for the brightest location.

    - This seems to only require light sensors.

  - Alternatively, if we went with a Cartesian coordinate frame, it would be natural to steer the robot with a joystick or define navigation missions.

    - This requires some way of knowing where the robot is, and how it is oriented, relative to its original position (or a predefined room coordinate).

    - If we can sample fast enough, perhaps a gyroscope through a Kalman filter would be sufficient?

    - Alternatively, we could go more exotic with visual-inertial odometry.


- Train a control policy.

  - I like the direction I started down with the Unity simulation and the neural network & evolutionary algorithm.

  - Perhaps a more straightforward way to create a control policy for this robot is to implement reinforcement learning.

  - Final tuning of the algorithm needs to happen on hardware.

  - More complex simulation scenarios for the best-fit models are probably needed to reduce the likelihood of overfitting (such as to the quadrant task).
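For the gyroscope idea, a scalar Kalman filter on heading is probably the simplest starting point. This Python sketch assumes the gyro reports yaw rate in radians per second. It also assumes an occasional absolute heading fix is available, for example from the light-sensor polar frame mentioned above. The noise values are placeholders to be tuned, and the heading is kept unwrapped for simplicity.

```python
from dataclasses import dataclass


@dataclass
class HeadingKalman:
    """Scalar Kalman filter for robot heading in radians (unwrapped)."""
    heading: float = 0.0      # state estimate
    variance: float = 1.0     # uncertainty of the estimate
    gyro_noise: float = 0.01  # process noise per second of integration (placeholder)
    meas_noise: float = 0.05  # variance of an absolute heading fix (placeholder)

    def predict(self, gyro_rate: float, dt: float) -> None:
        """Integrate the gyro; uncertainty grows with every step."""
        self.heading += gyro_rate * dt
        self.variance += self.gyro_noise * dt

    def update(self, measured_heading: float) -> None:
        """Blend in an absolute heading measurement weighted by the Kalman gain."""
        gain = self.variance / (self.variance + self.meas_noise)
        self.heading += gain * (measured_heading - self.heading)
        self.variance *= 1.0 - gain
```

Running `predict` at the gyro's sample rate and `update` only when a fix arrives keeps the estimate smooth between fixes. It also stops the integrated gyro drift from growing without bound.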

To quote myself on the old draft of this post:

Well if any of you have comment out there in the wide web internet realm please drop me a comment. Till the next post be safe and go out and build something! :p